Optimizing Performance in Open Source Data Warehouses: Query Tuning, Data Partitioning, and Caching Strategies

Data warehouses have become the technological backbone of modern analytics, offering organizations a scalable and cost-effective way to process and analyze enormous volumes of data. Organizations can choose from a variety of solutions: from proprietary data warehouses, such as BigQuery and Snowflake, to open source data warehouses, such as Apache Druid™, Apache Pinot™, and ClickHouse. These tools deliver low-latency queries that empower teams to build high-performance analytical applications (for example, dashboards, segmentation tools, and credit scoring) that support both real-time and batch workloads.

Most data warehouses support SQL as the common language for end users to process and retrieve data. However, not all queries are created equal. Without proper tuning, even the most robust warehouse can suffer from slow queries, resource bottlenecks, and escalating costs. Furthermore, without proper configuration, all queries will be expensive.

In this article, you'll learn strategies for optimizing query speed and enhancing resource efficiency in open source data warehouses through query tuning, data partitioning, and caching. Finally, you'll see how integrating your warehouse with a modern lakehouse architecture, powered by Onehouse, can take your analytics to the next level.

Query Tuning

Improving the performance of a data warehouse is usually interpreted as increasing the speed with which it retrieves data. This section explores how to tune and optimize queries through simplification, execution plan analysis, and predicate pushdown.

Query Simplification

While most data warehouses use automatic query optimization engines, they are not a silver bullet. Often, structuring queries adequately can improve how these engines interpret and execute them. Common table expressions (CTEs) are a popular pattern that enables you to reference a temporary result set multiple times in a query, avoiding repeated subqueries or joins. By breaking down nested subqueries into named, logical blocks, CTEs help query optimizers generate more efficient execution plans. This is especially true for hierarchical or recursive queries (e.g. organizational charts), where CTEs streamline traversal logic.

Furthermore, many queries involve correlating two or more tables. By filtering these tables early on, a data warehouse needs to join fewer rows and avoids unnecessary data scans, a technique supported across Apache Druid, Apache Pinot, and ClickHouse.

All major warehouses (Apache Druid, Apache Pinot, ClickHouse) provide tools to inspect query execution plans, helping engineers identify bottlenecks, such as full table scans, inefficient joins, or expensive sort operations. A careful evaluation of the execution plan can offer opportunities for manual improvement that the optimization engine missed.

Indexes

Most data warehouses offer some kind of indexing mechanism. An index is a data structure that enables quick retrieval of records from a database table by storing pointers to the actual data locations. While indexes require extra storage capacity, the speed gains are often significant.

While the principle is always the same, the implementation varies across data warehouse solutions. Apache Druid uses traditional bitmaps (aka inverted indexes), which are created automatically. Apache Pinot offers a star-tree index, which offers a dynamic multi-column index that looks at the usage pattern for each table.

ClickHouse has two main indexing mechanisms. The first can be used only on the primary key and can be specified when creating the table engine. It's known as primary indexing and is typically used to determine the order in which data is stored on disk. They are relevant for optimizing queries that benefit from sorted data, such as time series. The second type, the secondary data skipping indexing, is used to improve the performance of queries by enabling ClickHouse to skip over irrelevant data granules during query execution.

Materialized Views

Like an index, most of today's vendors offer materialized views. A materialized view is a precomputed data set derived from a query and stored physically to accelerate query performance by avoiding repeated computation. Unlike standard views, which execute queries on demand, materialized views typically cache results and refresh them periodically or incrementally, computing the results at the time of writing or at a scheduled time instead of at the time of reading.

Apache Druid supports materialized views via aggregated data sets (or rollups), which optimizes queries by rewriting them to use pre-aggregated data. They are ideal for speeding up complex `GROUP BY` or time-series queries on large data sets with high dimensionality.

Apache Pinot provides materialized views through upsert-enabled tables. When a primary key is defined, Apache Pinot automatically retains only the latest record version, eliminating the need for downstream logic to filter duplicates. When looking up a record in Apache Pinot, you need to provide a `WHERE` clause only with the primary key. Apache Pinot returns only the latest version.

ClickHouse has two types of materialized views: incremental materialized views and refreshable materialized views. The former acts as an insert trigger, populating a target table with results from a `SELECT` query whenever data is inserted into the source table. The latter is a more traditional approach: it's a periodic execution of a query over a full data set, resulting in a target table that can be queried quickly.

Data Partitioning

Data partitioning organizes data sets into smaller, manageable segments based on specific values of a partition field (usually this is the date, but for specific use cases this can be the organization or device ID). This enables systems to scan only relevant partitions during queries. This reduces input and output at query time, drastically accelerating performance, especially at query time.

However, choosing an inadequate partition field might even decrease performance. For this reason, it's worth taking some time to decide on it.

Best Practices for Partition Fields

There are two best practices for setting your partition fields:

- Ensure the partition field matches the usage pattern of each table: Choose partition fields that match common query filters (e.g. `timestamp` for time-series analytics or `org_id` for organization-specific queries).

- Avoid data skew: Data skew occurs when data is unevenly distributed across partitions in a distributed system, leading to some partitions holding significantly more data or processing load than others. This imbalance undermines the efficiency of parallel processing as tasks handling skewed partitions take longer to complete, creating bottlenecks that delay overall job execution. High-cardinality partition fields, such as user IDs, typically ensure even data distribution, but it can cause over-partitioning and create small-file issues.

Partitions Improvement

Most tools optimize partitions out of the box, but there might be some tweaks here and there. A popular technique is salting, which involves adding a random or hashed salt to partition field values before performing joins or aggregations, effectively distributing data more evenly across partitions and mitigating performance bottlenecks. Although not a standard feature, it can easily be applied in Apache SparkTM using custom logic.

Finally, there are some vendor-specific techniques for improving partitions. For example, ClickHouse suggests aligning primary indexes (supra) with partition fields. Vendors, such as Onehouse, have specific features (LakeView) to warn users when some tables are extremely skewed and when these tables need to be repartitioned.

Data Sharding

Data sharding is another powerful technique for improving data warehouse performance by horizontally partitioning large data sets across multiple servers or nodes, with each subset known as a shard. Sharding distributes both storage and query workloads, enabling parallel processing—so queries can be executed simultaneously across shards, dramatically reducing response times and enhancing throughput for both reads and writes. New shards can be added seamlessly to accommodate growing data volumes and user loads, preventing single-server bottlenecks and ensuring stable performance even as demand increases.

Although both shards and partitions represent subsets of data, they differ in that a single shard can contain multiple partitions.

Sharding support varies across systems. ClickHouse randomly distributes data across shards. Apache Druid and Apache Pinot, in contrast, use the concept of segments: time-based subsets of table data that scale horizontally as needed for both storage and computation. Interestingly, there are some trade-offs regarding segment size, which can be configured at the time of ingestion when using real-time data streams.

Caching

Caching in data warehouses is a performance optimization technique that stores frequently accessed data or precomputed results in high-speed storage layers to minimize redundant computation and reduce query latency. By serving repeated requests directly from the cache, warehouses avoid costly operations and reprocessing, drastically improving resource efficiency.

ClickHouse has a built-in query cache that enables the results of `SELECT` queries to be stored and reused for subsequent identical queries. This cache is transactionally inconsistent (results may not always reflect the very latest data changes), making it well-suited for online analytical processing (OLAP) workloads where slight staleness is acceptable. Like most of ClickHouse's features, its caching is highly configurable.

Apache Pinot does not offer caching mechanisms. However, since early 2025, it has expression reuse, which enables identical subqueries to bypass reprocessing by fetching the precomputed results from memory.

Apache Druid has two major caching mechanisms. Per-segment caching stores partial query results for each segment, and whole-query caching is for storing the complete results of individual queries. The former is tailored for queries that request real-time ingested data, while the latter is aimed at repeatedly querying batch data.

A typical use case that heavily relies on caching mechanisms is a real-time user activity dashboard. These dashboards rely on real-time data (events, such as clicks and cart additions), supplemented by historical batch loads (such as user demographics).

Combining Optimization Strategies

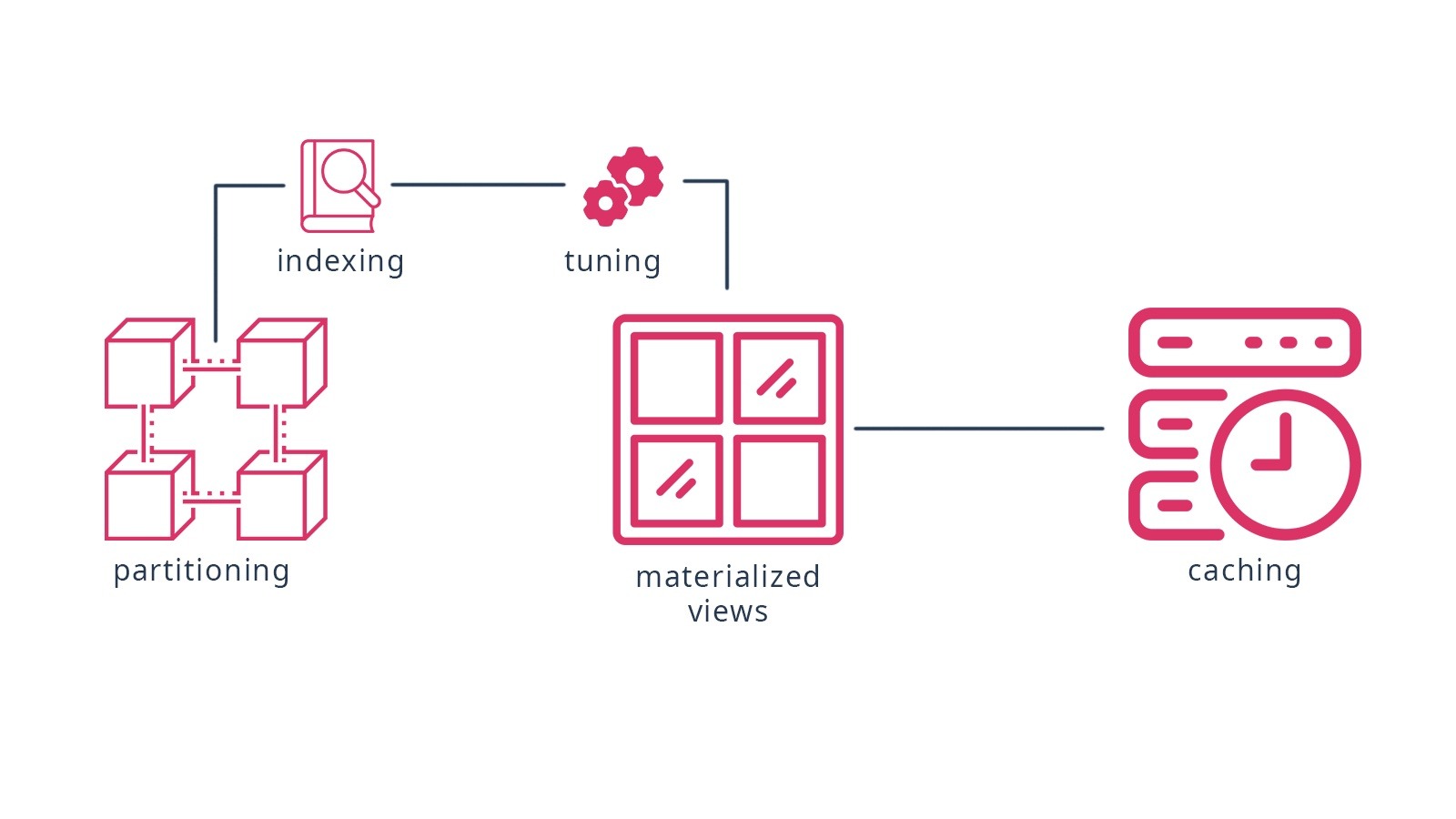

Query tuning, indexing, materialized views, partitioning, and caching form a cohesive optimization strategy by addressing different layers of the data pipeline:

- Query tuning ensures execution plans leverage available resources efficiently, while indexing minimizes data scans.

- Materialized views reduce computational overhead for repetitive aggregations.

- Partitioning limits input by pruning irrelevant data blocks.

- Caching eliminates redundant computation for frequent queries.

Furthermore, these techniques amplify each other. Here's an example of how they would work together in a fraud detection use case:

- Caches can permanently hold the data of products that are often part of fraudulent transactions.

- Partitioning narrows the data set. Partitions for each transaction date and region make indexes and materialized views more effective.

- An index on customer number can be used for quickly looking up a user's profile that is suspected of fraud.

- Tuned queries leverage indexes and partitions to skip non-essential data.

- Caching benefits from optimized query paths and storing prefiltered results.

Beyond Warehouse Tuning: The Lakehouse Advantage

While the warehouses mentioned earlier—Apache Druid, Apache Pinot, and ClickHouse—excel at low-latency querying, they often struggle with high-velocity data ingestion and real-time updates due to their columnar storage format. Every single update results in a complex pipeline of operations for updating and appending data making them less suited for handling streaming data. For example, ClickHouse's sparse indexing accelerates time-series queries but falters with frequent updates, while Apache Pinot's real-time segments require manual tuning to avoid metadata overload.

This is where a lakehouse becomes transformative. A lakehouse unifies the scalability of a data lake with the performance of a warehouse, giving teams a single foundation for both batch and real-time analytics. Built on open table formats such as Apache Iceberg™ and Apache Hudi™, a lakehouse makes it possible to ingest high-velocity data streams and apply incremental updates without reprocessing entire datasets. The result is analytics powered by fresh data, without the fragile pipelines and heavy operational overhead of traditional warehouses.

That foundation gets even more powerful with Onehouse, the universal, fully managed data lakehouse. Onehouse delivers all the advantages of a lakehouse while removing the complexity of building and maintaining one yourself. With Onehouse, you also get:

- Automated optimizations with Table Optimizer: Continuous background compaction, clustering, and cleaning that reduce manual ops work and keep tables tuned for performance.

- Advanced indexing with the Onehouse multi-modal index: Beyond traditional file and column pruning, this index layer accelerates queries across diverse workloads.

- Open data and freedom of choice with XTable and Multicatalog Sync: Support all leading table formats and sync your data to any catalog, allowing you to use the best tool for the job, whether interactive SQL, batch ETL, or real-time streaming, without lock-in.

- Improved performance and reduced cost with OCR and Quanton: The Onehouse Compute Runtime provides a Spark runtime that is optimized for lakehouse workloads, and the Quanton engine reduces ETL pipeline costs by 2-3x compared to other Spark engines.

Interested in bringing your warehouse to the lakehouse? Try Onehouse now.

Read More:

Subscribe to the Blog

Be the first to read new posts