Apache Kafka® (Kafka Connect) vs. Apache Flink® vs. Apache Spark™: Choosing the Right Ingestion Framework

Data ingestion frameworks are an important component of modern data pipelines. They enable organizations to collect, process, and analyze vast amounts of data from diverse sources. They essentially help transport data from its sources to centralized repositories, such as data lakes or warehouses, where it can be further processed and analyzed for insights and decision-making.

Ingestion is typically done in two primary phases: extracting data from various source systems and loading it into target destinations such as data lakes, warehouses, or analytics platforms. Different tools are optimized for different aspects of this ingestion process. Some specialize in the extraction phase, efficiently capturing data changes from source systems, while others excel in the loading phase, handling the complexities of delivering data to various storage and processing systems. The choice depends on many factors, including the type of data you’re working with, the required processing mode (batch or real-time), scalability needs, and integration requirements with other systems.

This article compares three leading data ingestion frameworks: Kafka Connect, Apache Flink, and Apache Spark. It provides a brief introduction to each framework before evaluating them based on performance, scalability, ease of integration, and extraction and loading capabilities.

Overview of Kafka Connect, Apache Flink, and Apache Spark

While they're all often used for data ingestion tasks, these three tools' strengths lie in different aspects of data movement and processing. One of the key ways they differ is in how they handle Change Data Capture (CDC), which has become increasingly important in modern ingestion pipelines.

CDC allows ingestion frameworks to capture row-level changes (such as inserts, updates, and deletes) from transactional databases in real time. This continuous capture means your downstream systems always reflect the latest state of the source, without waiting for scheduled batch jobs. As a result, CDC enables fresher data, supports real-time analytics, and reduces the overhead of full-table exports on production systems. If your use case demands up-to-date views, event-driven applications, or low-latency syncing, CDC support should be a key factor when choosing your ingestion framework.
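
To make the idea of row-level change capture concrete, here is a minimal sketch of how a downstream consumer might apply change events to keep a replica in sync. It assumes a Debezium-style event envelope with `op`, `before`, and `after` fields; the `apply_change` and `replay` helpers and the sample `orders` rows are hypothetical and only illustrate the pattern.

```python
from typing import Any, Dict, Iterable

Row = Dict[str, Any]


def apply_change(replica: Dict[int, Row], event: Dict[str, Any]) -> None:
    """Apply one Debezium-style change event to an in-memory replica keyed by `id`."""
    op = event["op"]
    if op in ("c", "r", "u"):
        # create, snapshot read, or update: upsert the new row image
        after = event["after"]
        replica[after["id"]] = after
    elif op == "d":
        # delete: remove the row identified by the old row image
        replica.pop(event["before"]["id"], None)
    else:
        raise ValueError(f"unknown op: {op!r}")


def replay(events: Iterable[Dict[str, Any]]) -> Dict[int, Row]:
    """Replay change events in order and return the resulting table state."""
    replica: Dict[int, Row] = {}
    for event in events:
        apply_change(replica, event)
    return replica


events = [
    {"op": "c", "before": None, "after": {"id": 1, "status": "new"}},
    {"op": "c", "before": None, "after": {"id": 2, "status": "new"}},
    {"op": "u", "before": {"id": 1, "status": "new"}, "after": {"id": 1, "status": "paid"}},
    {"op": "d", "before": {"id": 2, "status": "new"}, "after": None},
]

print(replay(events))  # {1: {'id': 1, 'status': 'paid'}}
```

Because every insert, update, and delete arrives as its own event, the replica converges on the source state without ever re-reading the full table.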

Now let's look at each tool in detail, including how they approach the CDC challenge.

Kafka Connect is a framework for integrating Kafka with external systems such as databases, key-value stores, file systems, and other messaging systems. Kafka Connect offers:

- Source connectors (for example, Debezium) that capture CDC events from transactional databases

- Sink connectors that write data to lakehouses and other systems such as S3, Snowflake, BigQuery, and Delta Lake

Kafka Connect is ideal for reliable data movement at scale. Beyond lightweight, record-level Single Message Transforms (SMTs), it performs no processing; it is focused strictly on data extraction and delivery.
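
As an illustration of what a CDC source connector looks like in practice, the sketch below builds the JSON body you might submit to a Kafka Connect worker to register a Debezium PostgreSQL connector. The configuration keys are standard Debezium options, but the host, database, user, table names, and the `debezium_postgres_connector` helper are assumptions made for this example.

```python
import json
from typing import Dict, List


def debezium_postgres_connector(name: str, host: str, database: str,
                                tables: List[str]) -> Dict[str, object]:
    """Build a connector registration payload (hypothetical helper)."""
    return {
        "name": name,
        "config": {
            "connector.class": "io.debezium.connector.postgresql.PostgresConnector",
            "plugin.name": "pgoutput",            # logical decoding plugin built into PostgreSQL 10+
            "database.hostname": host,
            "database.port": "5432",
            "database.user": "cdc_user",          # placeholder credentials
            "database.password": "change-me",
            "database.dbname": database,
            "topic.prefix": name,                 # Debezium 2.x naming; older releases used database.server.name
            "table.include.list": ",".join(tables),
            "tasks.max": "1",                     # this connector runs as a single task
        },
    }


payload = debezium_postgres_connector(
    name="inventory",
    host="db.example.internal",
    database="shop",
    tables=["public.orders", "public.customers"],
)
body = json.dumps(payload, indent=2)
print(body)
```

With a running worker, this body would typically be sent as a `POST` to the worker's `/connectors` endpoint (port 8083 by default). Change events for `public.orders` would then land on a topic named `inventory.public.orders`.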

Apache Flink is a distributed processing engine designed for both batch and real-time workloads. It excels at stream processing, offering low-latency performance and flexible state management. In the context of data ingestion:

- It provides the Flink CDC source connector to ingest CDC data directly from databases without Kafka.

- It can also write streaming data to lakehouses and analytical sinks with fine-grained control over event time, state, and consistency.

Flink is especially suited for building low-latency, event-driven pipelines that require complex state management or in-stream processing before loading.
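
For a sense of how a Kafka-free CDC source is declared, here is a small sketch that renders a Flink SQL `CREATE TABLE` statement for the Flink CDC MySQL connector. The `WITH` options shown are the connector's documented option names; the table, columns, credentials, and the `mysql_cdc_table_ddl` helper are placeholders invented for this example.

```python
from typing import Dict, List, Tuple


def mysql_cdc_table_ddl(table: str, columns: List[Tuple[str, str]],
                        primary_key: str, options: Dict[str, str]) -> str:
    """Render a Flink SQL DDL string for a CDC-backed table (hypothetical helper)."""
    cols = ",\n".join(f"  `{name}` {sql_type}" for name, sql_type in columns)
    opts = ",\n".join(f"  '{key}' = '{value}'" for key, value in options.items())
    return (
        f"CREATE TABLE `{table}` (\n"
        f"{cols},\n"
        f"  PRIMARY KEY (`{primary_key}`) NOT ENFORCED\n"
        f") WITH (\n"
        f"{opts}\n"
        f")"
    )


ddl = mysql_cdc_table_ddl(
    table="orders_cdc",
    columns=[("id", "BIGINT"), ("status", "STRING"), ("updated_at", "TIMESTAMP(3)")],
    primary_key="id",
    options={
        "connector": "mysql-cdc",
        "hostname": "db.example.internal",   # placeholder connection details
        "port": "3306",
        "username": "cdc_user",
        "password": "change-me",
        "database-name": "shop",
        "table-name": "orders",
    },
)
print(ddl)
```

The resulting statement could be run in the Flink SQL client, or passed to `TableEnvironment.execute_sql(...)` in PyFlink, provided the Flink CDC MySQL connector JAR is available to the cluster.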

Apache Spark is a general-purpose cluster computing framework that supports a wide range of data processing tasks, including batch processing, real-time streaming, and machine learning. Originally developed as a faster alternative to Apache Hadoop® MapReduce, Spark now has a mature and extensive ecosystem with support for multiple programming languages, libraries, and tools. It supports:

- Batch ingestion from various data sources but does not support CDC natively. You can implement CDC-style pipelines using Spark by combining it with tools like Debezium and Kafka for change capture, but if you're using Spark alone for extraction, it will operate in batch mode.

- Batch writes to lakehouses using formats like Delta Lake, Apache Iceberg™, or Apache Hudi™

- Spark Structured Streaming for writing event streams, though it operates on a micro-batch model and may introduce higher latencies compared to Flink

Spark is best suited for workloads that prioritize scalability, transformation complexity, or integration with machine learning and SQL-based analytics workflows.

One common challenge with Spark ingestion is cost efficiency: most production Spark jobs waste 30–70% of allocated compute due to idle executors, shuffles, and inefficient autoscaling. Tools like Cost Analyzer for Apache Spark™ make it easy to spot this waste.

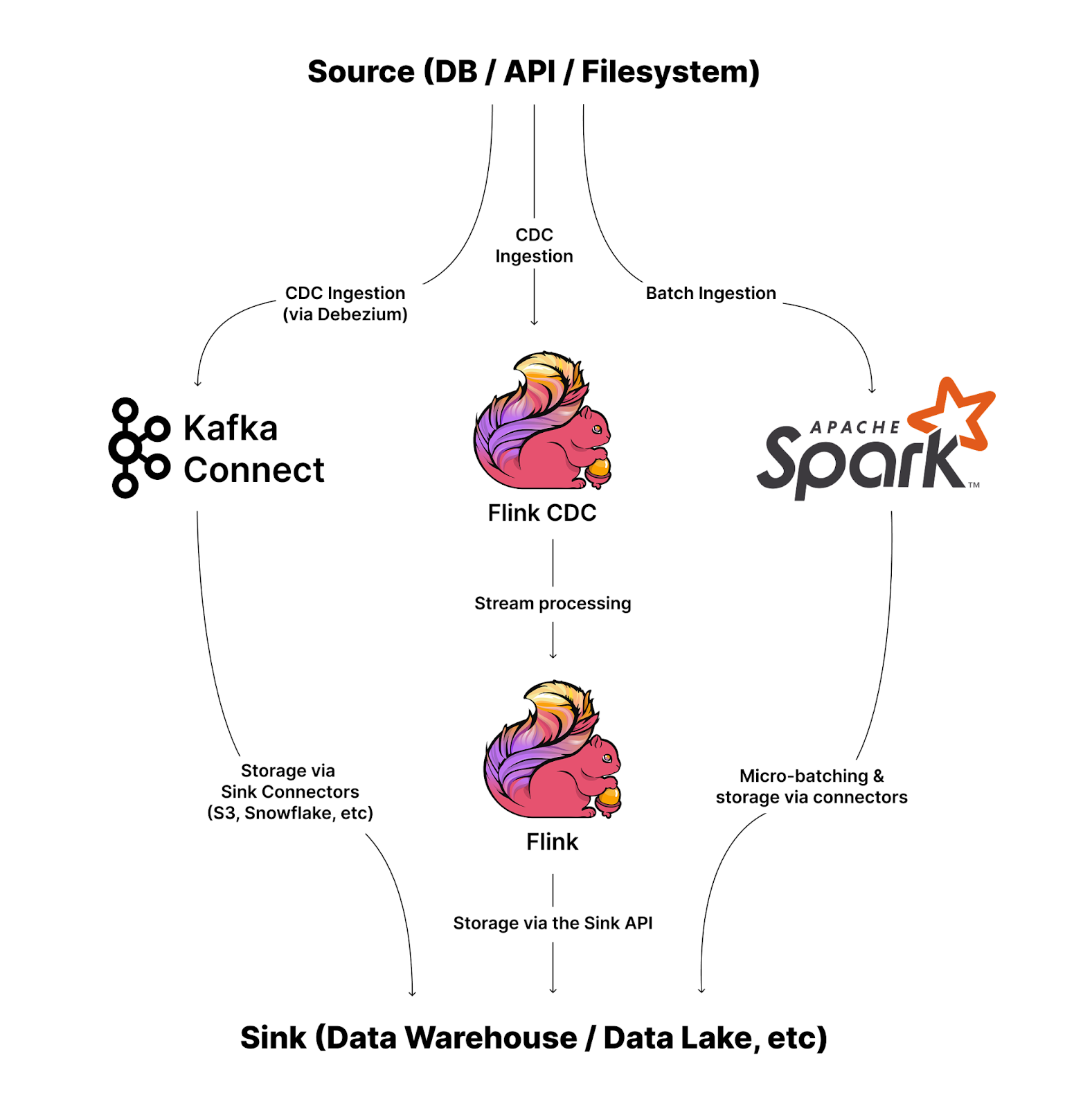

Here’s an example of how these could fit together in a pipeline:

Kafka Connect and Flink CDC are both commonly used at the initial extraction stage. Kafka Connect supports CDC through Debezium and can also deliver data directly to sinks like S3 or Snowflake using sink connectors, without the need for additional processing layers. Flink CDC similarly connects directly to databases and enables real-time extraction and transformation through stream processing jobs, before writing to downstream systems.

Spark, on the other hand, is best suited for batch ingestion from a variety of sources, including databases, APIs, and file systems. It can also consume micro-batched streams and perform complex transformations before writing to sinks such as data warehouses or lakehouses.

Each tool can handle both ingestion and loading responsibilities based on your needs. You can build end-to-end pipelines with Flink or Spark alone, or combine these tools selectively depending on latency, transformation, and integration requirements.

Performance and Scalability

Performance and scalability are two of the most important factors to consider when choosing a data ingestion framework, as they determine its ability to handle large data volumes and adapt to changing demands. Performance is measured by latency and throughput, while scalability depends on the data model and scaling options. Optimization and tuning features also play a role in maximizing efficiency.

Latency and Throughput

When evaluating data ingestion frameworks, ingestion latency (the time taken to capture data changes from source systems and deliver them to downstream targets) and throughput (the volume of data processed over time) are critical metrics. Here's how Kafka Connect, Flink CDC, and Apache Spark compare:

- Kafka Connect, often used with Debezium for CDC, is designed for scalable and reliable data movement. While it doesn't process data itself, its ingestion latency is influenced by connector configurations and the performance of source and sink systems. In practical scenarios, Kafka Connect with Debezium can achieve ingestion latencies in the range of hundreds of milliseconds to a few seconds, depending on system tuning and load. Its throughput capabilities are robust, but optimizing buffer sizes and batch configurations is essential for minimizing latency.

- Flink CDC enables direct streaming of change events from databases to downstream systems without the need for an intermediate Kafka cluster. This architecture allows for lower ingestion latencies, often achieving sub-second delays, making it suitable for real-time data synchronization tasks. Flink's architecture supports high throughput and low latency, scaling to thousands of cores and terabytes of application state. If you use it with Debezium, though, you will again see similar performance figures as with Kafka Connect and Debezium, as Debezium becomes the bottleneck.

- Apache Spark operates on a micro-batch processing model, with stream-processing latency currently around 150-250 ms. With Spark, you can process large volumes of data (up to 1 million events/sec) with the right cluster configuration and workload characteristics. However, if you’re using Spark directly for ingestion, without upstream CDC tools like Debezium, Kafka, or Flink, you’ll typically be pulling data in scheduled batch jobs, often every few hours. This leads to significantly worse data freshness compared to streaming CDC pipelines, making Spark less suitable for real-time use cases where low-latency data is critical.

When it comes to latency and throughput, Flink CDC stands out for its low latency, making it ideal for real-time analytics and event-driven processing. Spark, with its micro-batch model, offers slightly higher latency but excels in handling high-throughput workloads efficiently. Kafka Connect, while not a processing framework by itself, enables reliable and scalable data movement, but its performance is largely dependent on connector configurations and external system capabilities.
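
The effect of a micro-batch trigger on freshness can be estimated with simple arithmetic. The sketch below is a simplified model, not a benchmark: it assumes evenly spaced arrivals, ignores processing time, and treats an event that arrives exactly on a trigger boundary as part of that batch. The `micro_batch_waits` function is hypothetical.

```python
from typing import List


def micro_batch_waits(arrival_times_ms: List[int], trigger_interval_ms: int) -> List[int]:
    """Time each event waits until the next trigger boundary fires."""
    # (-t) % interval is the distance from t up to the next multiple of interval
    return [(-t) % trigger_interval_ms for t in arrival_times_ms]


# One event every 10 ms for one second
arrivals = list(range(0, 1000, 10))

waits = micro_batch_waits(arrivals, trigger_interval_ms=200)
average_wait = sum(waits) / len(waits)

print(f"average wait: {average_wait} ms, worst case: {max(waits)} ms")
# average wait: 95.0 ms, worst case: 190 ms
```

Under these assumptions, the average wait is roughly half the trigger interval before any processing starts, which is why a per-event engine can deliver lower end-to-end latency than a micro-batch engine at the same throughput.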

Scaling Options

All three frameworks support horizontal scaling, which is essential for accommodating increased data volumes and processing demands. Here’s how the three compare against each other when it comes to scaling options:

- Kafka Connect is designed for horizontal scalability. It's well-suited for steady, high-throughput pipelines and scales effectively in distributed environments. However, scaling is static and configuration-driven: in modest clusters with typical connector workloads, throughput is often on the order of tens to hundreds of MB/s with proper tuning, but capacity doesn’t adjust dynamically to load changes. It also depends heavily on Kafka cluster health and partitioning, which can become a bottleneck if not properly configured.

- Flink CDC, unlike Kafka Connect, is built on Apache Flink’s streaming runtime, which offers fine-grained scaling. It achieves fine-grained parallelism by splitting ingestion pipelines into operator subtasks, allowing ingestion throughput to scale with available cluster resources. While automatic scale-in is supported during incremental sync phases, scaling up generally requires a manual adjustment of parallelism or a pipeline restart. Flink’s advanced backpressure management and checkpointing ensure ingestion pipelines stay responsive under load. In terms of throughput, Flink CDC can handle hundreds of thousands of events per second in modest clusters, depending on the source system's performance and sink characteristics. It's particularly well-suited for bursty or variable workloads, where scaling and fault tolerance are important.

- Spark offers strong horizontal scaling for both batch and streaming ingestion workloads, but its micro-batch model makes it less agile in reacting to changing volumes in real time. Scaling up is straightforward: larger clusters and wider parallelism improve throughput. But scaling down can introduce delays or uneven data distribution due to Spark’s bulk synchronous parallel (BSP) execution model. This scale-down inefficiency is one of the main reasons Spark clusters often run ‘hot and idle’ at the same time. Our experience analyzing thousands of workloads shows this can inflate costs by 2–3x, which makes cost optimization tooling and smarter autoscaling essential for large-scale Spark deployments. Spark Structured Streaming can generally process up to one million events per second in stable conditions, but its compute overhead is higher than Flink’s, and tuning is often required to achieve sustained throughput under load.

In summary, Flink CDC scales the most dynamically, offering fine-grained parallelism and responsive resource management through Flink’s operator-based architecture. It's best suited for variable and bursty workloads where adaptive scaling and consistent low-latency performance are essential. Spark scales efficiently to very large workloads in both batch and streaming modes, but its bulk synchronous model introduces latency and overhead when scaling down or responding to uneven load. Kafka Connect supports high ingestion throughput via horizontal task distribution, but its scaling is static; while it can scale to high levels with manual configuration, it lacks the dynamic elasticity of Flink CDC.
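
One reason Kafka Connect scaling depends on partitioning is that sink tasks read from Kafka as members of a consumer group, so a partition is consumed by at most one task at a time. The sketch below illustrates the consequence with a simple round-robin assignment; the real assignment strategy may differ, and the `assign_partitions` and `active_tasks` helpers are hypothetical.

```python
from typing import List


def assign_partitions(num_partitions: int, tasks_max: int) -> List[List[int]]:
    """Spread partition ids over tasks round-robin; one list of partitions per task."""
    assignment: List[List[int]] = [[] for _ in range(tasks_max)]
    for partition in range(num_partitions):
        assignment[partition % tasks_max].append(partition)
    return assignment


def active_tasks(assignment: List[List[int]]) -> int:
    """Count tasks that received at least one partition."""
    return sum(1 for partitions in assignment if partitions)


print(assign_partitions(6, 4))                 # [[0, 4], [1, 5], [2], [3]]
print(active_tasks(assign_partitions(6, 8)))   # 6, so two of the eight tasks sit idle
```

Raising `tasks.max` beyond the partition count adds no sink throughput in this model; the topic needs more partitions first.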

Optimization and Tuning Options

Each framework offers different optimization options to enhance performance:

- Kafka Connect’s ingestion performance is largely determined by connector configurations. Key tuning parameters include batch size, poll interval, flush size, and task parallelism. You can optimize these settings to reduce latency and improve throughput, especially when paired with a high-performance Kafka cluster. Sink connectors may also benefit from tuning write intervals, retry policies, and batching logic depending on the target system. However, Kafka Connect remains fundamentally configuration-driven. It offers limited built-in intelligence for dynamic performance tuning or resource optimization.

- Flink CDC builds on Apache Flink’s optimization model but introduces its own set of tuning considerations specific to CDC-based pipelines. While Flink’s cost-based optimizer and streaming planner aren't directly applicable to CDC jobs (which are more operational than analytical), Flink CDC pipelines benefit heavily from fine-tuning around state management, checkpointing, and parallelism. Other key controls include incremental snapshot settings, buffer timeout, and network memory. So, Flink CDC offers deeper control over ingestion behavior but requires careful tuning to align fault tolerance, throughput, and latency across a distributed environment.

- Spark uses the Catalyst Optimizer, which is great at optimizing data transformation and processing queries. It integrates the Tungsten execution engine to improve framework performance. However, Spark’s optimization is more geared towards batch processing tasks, where it can leverage in-memory computing capabilities to accelerate data processing. Performance tuning in Structured Streaming typically involves adjusting batch intervals to balance latency and processing cost, as well as managing shuffle partitions and memory usage.

To sum up, Flink offers the deepest controls for tuning real-time stream processing, Spark optimizes batch and micro-batch workloads with Catalyst and Tungsten, and Kafka Connect supports fine-tuning data movement efficiency through configurable parameters.
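
As a concrete example of configuration-driven tuning, the sketch below collects a few Kafka Connect settings that commonly affect latency and throughput. The keys are real Debezium, producer-override, and S3 sink connector options, but the values are illustrative starting points rather than recommendations, and the `check_debezium_tuning` helper is hypothetical.

```python
from typing import Dict, List

# Source side: Debezium batching plus Kafka producer overrides
DEBEZIUM_SOURCE_TUNING: Dict[str, str] = {
    "max.batch.size": "4096",                  # events handed over per batch (default 2048)
    "max.queue.size": "16384",                 # internal buffer (default 8192)
    "poll.interval.ms": "100",                 # wait between polls for new changes (default 500)
    "producer.override.linger.ms": "20",       # let the producer batch briefly before sending
    "producer.override.batch.size": "262144",  # producer batch size in bytes
}

# Sink side: how often the S3 sink connector commits files
S3_SINK_TUNING: Dict[str, str] = {
    "tasks.max": "4",
    "flush.size": "50000",                     # records per output file
    "rotate.interval.ms": "60000",             # also close files after this time span
}


def check_debezium_tuning(config: Dict[str, str]) -> List[str]:
    """Return a list of problems found in the source tuning values."""
    problems: List[str] = []
    if int(config["max.queue.size"]) <= int(config["max.batch.size"]):
        problems.append("max.queue.size must be larger than max.batch.size")
    if int(config["poll.interval.ms"]) <= 0:
        problems.append("poll.interval.ms must be positive")
    return problems


print(check_debezium_tuning(DEBEZIUM_SOURCE_TUNING))  # []
```

The `producer.override.*` keys only take effect when the worker's `connector.client.config.override.policy` permits them. Smaller batches and shorter intervals generally lower latency at the cost of throughput, so these values are best adjusted against measurements from your own pipeline.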

Ease of Integration

Your data ingestion framework also needs to be able to easily integrate with other systems. This includes support for diverse data sources, compatibility with various programming languages, and the availability of comprehensive documentation and community support.

Integration with Data Sources

The more data sources a tool supports, the better it is for diverse data ingestion tasks.

- Kafka Connect supports a wide variety of source systems, including CDC through Debezium connectors (for example, MySQL, PostgreSQL, SQL Server, MongoDB). For writing data, Kafka Connect offers sink connectors to cloud storage (for example, S3, GCS), data warehouses (for example, Snowflake, BigQuery), and some file-based destinations. Kafka Connect can also write to Delta Lake, Apache Hudi, and Apache Iceberg using officially supported or community-maintained sink connectors. Both Iceberg and Hudi provide native Kafka Connect sinks that support features like upserts, schema evolution, and transactional writes. Therefore, Kafka Connect can integrate directly with modern lakehouse tables, without requiring additional processing frameworks for basic ingestion tasks.

- Flink CDC integrates directly with CDC-enabled databases and does not require Kafka as an intermediary. On the output side, Flink CDC (via Apache Flink’s connectors) can write natively to Apache Hudi, Apache Iceberg, and Delta Lake using dedicated format-aware sinks. This makes it one of the most flexible options for streaming directly into lakehouse tables with low latency.

- Apache Spark supports a broad range of input sources, including databases, message brokers like Kafka, and file-based systems. Spark provides first-class support for all three major lakehouse formats: Apache Hudi, Apache Iceberg, and Delta Lake. It integrates tightly with these formats via built-in connectors and supports both batch and streaming writes. Spark also offers advanced features like time travel, schema evolution, and merge operations that are essential for managing evolving lakehouse tables.

In terms of source coverage, Kafka Connect leads with its extensive plugin ecosystem. For direct, Kafka-free CDC pipelines into lakehouses, Flink CDC is better suited. Spark offers the broadest integration surface across both batch and streaming pipelines with first-class support for lakehouse formats.

Language Support and APIs

The number of supported languages and APIs can significantly impact the development experience, especially for teams working in multiple programming environments.

- Kafka Connect supports integration through its REST API and CLI, allowing developers to manage connectors programmatically. However, it doesn't provide native support for multiple programming languages beyond its core Java implementation.

- Flink CDC inherits Flink’s multi-language APIs, including support for Java, Scala, and Python (via PyFlink). The Table API and SQL interface make Flink CDC particularly approachable for developers building streaming pipelines who don’t want to dive into low-level stream logic.

- Spark also supports multiple programming languages, including Java, Scala, Python, and R. Its comprehensive API set includes DataFrames, Datasets, and RDDs, making it versatile for diverse data processing tasks.

Both Flink CDC and Spark offer a wide range of language support and APIs, making them suitable for developers working in multiple languages. However, Spark’s broader ecosystem and more mature API set give it a slight edge in terms of versatility.
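
Although Kafka Connect itself is Java-based, its REST API can be driven from any language. The sketch below prepares a few management requests with Python's standard library without sending them. The endpoints are part of the Kafka Connect REST API; the worker URL, the connector name, and the helper functions are assumptions made for this example.

```python
import json
from typing import Dict
from urllib.request import Request

CONNECT_URL = "http://localhost:8083"  # assumption: a local Kafka Connect worker


def status_request(name: str) -> Request:
    """GET the state of a connector and its tasks."""
    return Request(f"{CONNECT_URL}/connectors/{name}/status", method="GET")


def pause_request(name: str) -> Request:
    """PUT to pause a connector without deleting it."""
    return Request(f"{CONNECT_URL}/connectors/{name}/pause", method="PUT")


def update_config_request(name: str, config: Dict[str, str]) -> Request:
    """PUT the full connector configuration (creates the connector if missing)."""
    return Request(
        f"{CONNECT_URL}/connectors/{name}/config",
        data=json.dumps(config).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


request = update_config_request(
    "orders-sink",
    {
        "connector.class": "org.apache.kafka.connect.file.FileStreamSinkConnector",
        "topics": "orders",
        "file": "/tmp/orders.txt",
        "tasks.max": "2",
    },
)
print(request.get_method(), request.full_url)
```

Against a running worker, each request could be sent with `urllib.request.urlopen(request)`; the same calls work equally well from a shell script or a CI job.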

Documentation and Community Support

Detailed documentation and an active, growing community ensure that a tool is easy to adopt and continues to evolve alongside data processing technologies.

- Kafka Connect benefits from the extensive Apache Kafka ecosystem, which includes comprehensive documentation and a large community of developers contributing to its growth.

- Flink CDC has grown rapidly in adoption and now has strong community backing, especially as part of the Apache Flink ecosystem. While smaller than Spark’s, the Flink community is highly active, with regular releases and extensive documentation on state handling, fault tolerance, and connectors.

- Spark boasts one of the largest and most active open source communities, with over 2,000 contributors and more than 30,000 commits on GitHub. It releases updates frequently, ensuring that the framework stays current with evolving data processing needs.

Spark has the largest and most active community, with extensive documentation and frequent updates. Flink also has a strong community, though smaller than Spark’s. Kafka Connect benefits from the broader Kafka ecosystem, but its own community is smaller than those of Spark and Flink.

How Do You Choose the Right Ingestion Framework?

Finally, which data ingestion framework is the best fit depends on your specific use case and the type of ingestion required. Here are some example scenarios to guide your decision.

Extracting Data from Transactional Databases

Depending on whether you're ingesting full datasets periodically (in batches) or capturing changes continuously, the right tool will vary.

Batch-Based Ingestion

If you're periodically extracting full datasets, like scheduled exports or snapshot-based ETL jobs, Apache Spark is the best choice. It’s optimized for high-throughput, batch-style data ingestion and transformation. Spark is particularly effective when:

- Your source systems don’t support change data capture

- You’re working with large historical datasets

- You need to perform extensive data enrichment and transformation

Spark offers wide language support through PySpark (Python), Scala, and Spark SQL, making it a good option for both data engineers and analysts.

CDC-Based Ingestion

When you need to capture real-time row-level changes, CDC-based ingestion is a more suitable strategy. In this case, you can use either Flink CDC or Kafka Connect based on your requirements.

Kafka Connect and Debezium together provide a production-ready solution for streaming database changes into Apache Kafka topics. The combination supports a variety of source systems and scales horizontally. This is a strong choice if you already operate a Kafka cluster and want to feed multiple consumers (such as S3, Snowflake, and Elasticsearch). That said, Kafka infrastructure is a prerequisite, and teams need to be familiar with Java, Kafka internals, and connector operations.

Flink CDC, in contrast, lets you ingest CDC streams directly from databases without Kafka. It reads changelogs and supports real-time processing and direct delivery to sinks like MySQL, Elasticsearch, or even warehouses. It simplifies your architecture and reduces latency, especially when you don’t need to fan out to multiple systems or maintain persistent message queues. It’s an excellent fit when you want to filter, transform, or enrich change data on the fly or when building stateful applications and real-time views based on change streams.

Flink CDC is also preferable in resource-constrained environments or edge deployments where maintaining a Kafka cluster is overkill. While Flink is built with Java/Scala, it also provides SQL and Table APIs that reduce the barrier to entry for data engineers with SQL fluency.

Loading Event Streams into Analytics Systems

For high-traffic use cases such as analytics systems, Flink CDC is a great fit due to its ability to combine change data capture with real-time processing in a single, integrated flow. It can directly capture changes from databases and apply in-stream filtering, enrichment, or windowed aggregations before loading the results into analytics systems such as Elasticsearch, Apache Pinot™, ClickHouse, or data warehouses.

Flink CDC builds on Apache Flink’s state management, event-time processing, and low-latency streaming engine. It provides prebuilt connectors and pipelines for CDC use cases while retaining Flink’s full stream-processing capabilities.
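
To show what event-time windowing means, here is a simplified, pure-Python sketch of a tumbling-window count. It assigns each event to a window by the timestamp carried in the event rather than by arrival order. It deliberately leaves out watermarks, late-data limits, and state cleanup, all of which a stream processor such as Flink manages for you; the `tumbling_window_counts` function and sample events are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def tumbling_window_counts(events: Iterable[Tuple[int, str]],
                           window_ms: int) -> Dict[Tuple[str, int], int]:
    """Count events per (key, window_start), choosing the window by event time."""
    counts: Dict[Tuple[str, int], int] = defaultdict(int)
    for event_time_ms, key in events:
        window_start = event_time_ms - (event_time_ms % window_ms)
        counts[(key, window_start)] += 1
    return dict(counts)


events = [
    (1_000, "orders"),
    (4_500, "orders"),
    (12_000, "orders"),
    (9_900, "orders"),  # arrives out of order, but its event time belongs to the first window
]

print(tumbling_window_counts(events, window_ms=10_000))
# {('orders', 0): 3, ('orders', 10000): 1}
```

The out-of-order event is still counted in the window where it occurred, which is the behavior that processing-time windows cannot guarantee.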

In contrast, Kafka Connect is not a processing tool; it’s an integration framework. It can stream CDC data into Kafka topics and then deliver those streams into analytics sinks via sink connectors (for example, Kafka Connect to ClickHouse or S3). However, it provides limited control over how data is written and lacks features for coordinating writes across partitions, handling deduplication, or managing consistency guarantees.

Spark Structured Streaming can be used for near real-time analytics, especially when integrated with structured data platforms or for micro-batch processing of CDC streams. However, it typically introduces more latency than Flink CDC and is less suited for use cases requiring precise event-time alignment or continuous stateful operations.

Moving Data to a Lakehouse

Modern data lakehouses serve many roles in a data platform. While they can act as long-term, cost-effective storage, they are increasingly used for direct analytics and reporting, where data freshness and latency matter. Choosing the right ingestion framework depends on whether you're optimizing for real-time analytical access or efficient batch backfills and long-term persistence.

Real-Time Analytics on Fresh Data

If your lakehouse is used for dashboards, real-time reporting, or streaming analytics, data freshness is critical. In these scenarios, Flink CDC is a strong choice. It captures changes from transactional databases as they happen and can apply lightweight, in-stream transformations before writing to formats like Apache Hudi, Delta Lake, or Apache Iceberg. This makes it well-suited for incrementally updating lakehouse tables with minimal delay, supporting low-latency queries without frequent full refreshes.

Kafka Connect can also stream CDC data into lakehouses using sink connectors. It provides flexibility through Single Message Transforms (SMTs), which allow for lightweight, record-level modifications like filtering fields or adjusting data types in-flight. While its default delivery guarantee is at-least-once, many modern connectors can be configured for exactly-once semantics, making it a reliable option for many pipelines. However, it is not designed for complex, stateful processing such as joins or aggregations, limiting its flexibility compared to a full stream processing framework.
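
The sketch below shows what an SMT chain might look like in a connector configuration, followed by a small Python emulation of its effect on a single record. The transform classes (`ReplaceField` and `Cast`) ship with Apache Kafka; the aliases, field names, and the `emulate_smts` function are assumptions made for this example, and the emulation only approximates what the transforms do inside the Connect runtime.

```python
from typing import Any, Dict

SMT_CONFIG: Dict[str, str] = {
    "transforms": "dropPii,castAmount",
    "transforms.dropPii.type": "org.apache.kafka.connect.transforms.ReplaceField$Value",
    "transforms.dropPii.exclude": "email,phone",   # older Kafka releases call this option "blacklist"
    "transforms.castAmount.type": "org.apache.kafka.connect.transforms.Cast$Value",
    "transforms.castAmount.spec": "amount:float64",
}


def emulate_smts(record: Dict[str, Any], config: Dict[str, str]) -> Dict[str, Any]:
    """Approximate the two transforms above on one record value."""
    excluded = set(config["transforms.dropPii.exclude"].split(","))
    result = {field: value for field, value in record.items() if field not in excluded}
    # Cast spec is "field:type"; only the float64 case is emulated here
    field, target_type = config["transforms.castAmount.spec"].split(":")
    if target_type == "float64" and field in result:
        result[field] = float(result[field])
    return result


record = {"id": 7, "email": "a@example.com", "phone": "555-0100", "amount": "19.90"}
print(emulate_smts(record, SMT_CONFIG))  # {'id': 7, 'amount': 19.9}
```

Each transform sees one record at a time and keeps no state between records, which is why SMTs suit field-level cleanup but not joins or aggregations.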

Batch Backfills and Long-Term Storage

When you're ingesting historical data, performing large backfills, or building offline datasets for machine learning and exploration, Apache Spark is often the most appropriate tool. Its batch processing engine efficiently loads large volumes of data from various sources (databases, file systems, APIs) and transforms them at scale. Spark also supports all major lakehouse formats (Delta Lake, Hudi, Iceberg) with features like time travel, schema evolution, and optimized file layout, making it ideal for building long-term datasets with complex transformations.

While Flink and Kafka Connect can also write to lakehouses, they are less suited for bulk data movement or workloads that require joins across multiple historical sources. In these use cases, Spark’s mature transformation engine and cost-based optimizer offer significant advantages.

Summary: Choosing the Right Framework Based on Your Use Case

Here's an overview table with recommendations for when to use the three tools:

Conclusion

To sum things up, choosing the right data ingestion framework (Kafka Connect, Apache Flink, or Apache Spark) depends on your specific use cases and requirements. Kafka Connect excels at database integration and reliable data movement through Kafka, while Apache Flink is ideal for low-latency, real-time processing. Apache Spark offers comprehensive batch processing capabilities with real-time support.

Each framework’s strengths in performance, scalability, and ease of integration should guide your decision. Kafka’s high-throughput messaging and Flink’s event-time processing make them suitable for real-time applications. Spark’s mature ecosystem supports diverse data processing tasks, making it versatile for both batch and real-time analytics.

If Spark is part of your ingestion strategy, don’t overlook the cost angle. Most teams can uncover hidden inefficiencies in minutes with Cost Analyzer for Apache Spark™ and then take advantage of Quanton for guaranteed 50%+ savings on Spark workloads.
