Data Architecture Survey Report: The Lakehouse Is Your Data Foundation for AI

If you're a data engineer or architect wondering whether your organization is moving fast enough on AI-enabling infrastructure, your peers aren't waiting anymore.

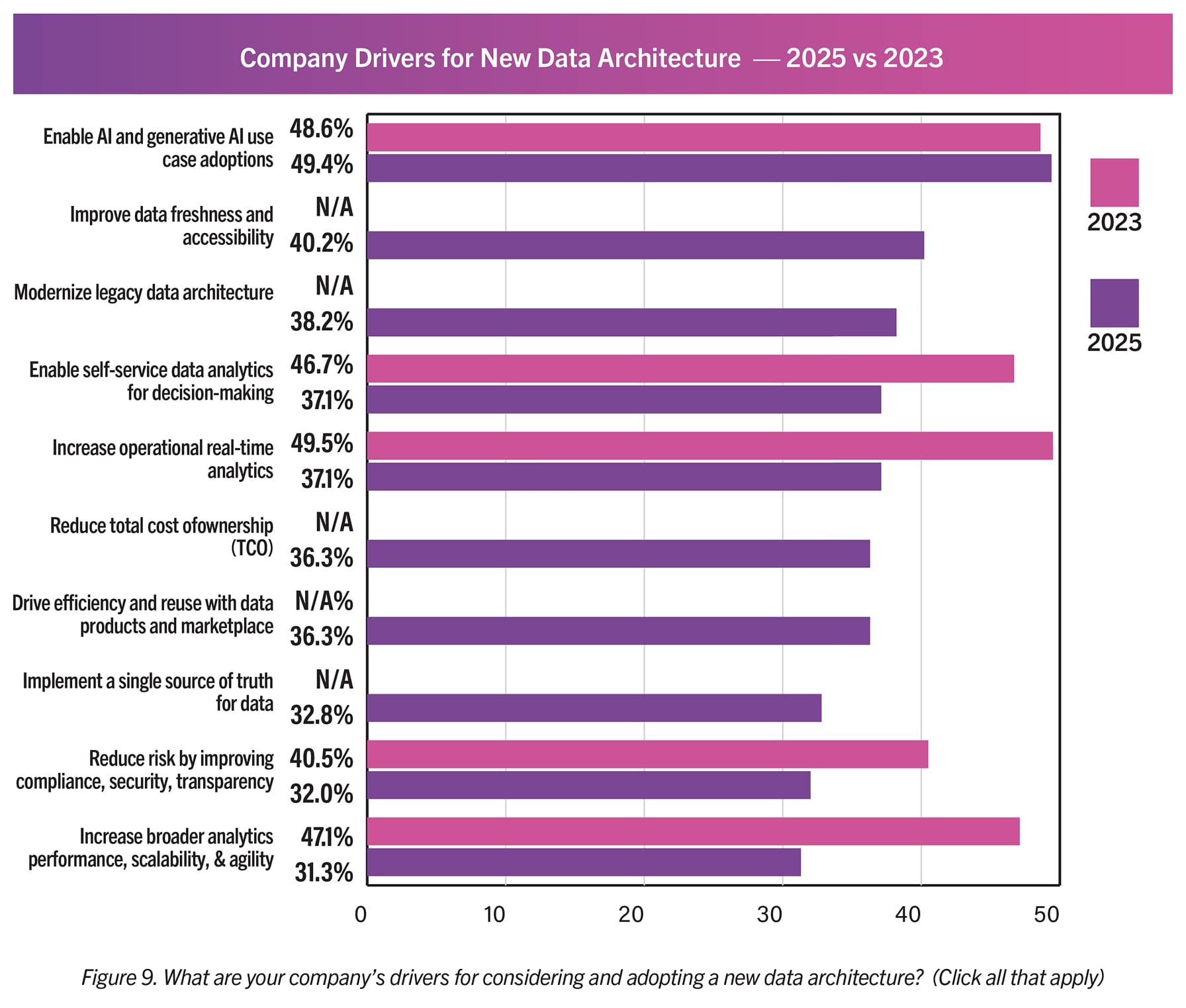

Fresh research from Radiant Advisors, based on a survey of 259 qualified enterprise data professionals, shows that enabling AI and GenAI use case adoption is now the top business driver for data architecture investments, cited by 49.4% of respondents. It's the primary business justification for data infrastructure modernization.

More than 85% of organizations have secured budgets, 82.6% plan to implement by year-end, and the Data Lakehouse has emerged as the strategic foundation.

The Market Is Centered on AI, and the Lakehouse Is Its Data Foundation

"Enable AI and Generative AI use case adoptions" leads all business drivers at 49.4%, making AI the #1 driver of new data architecture investments. Your peers are no longer optimizing for speed alone; they are re-architecting for intelligence.

Companies are modernizing their data stacks to support AI, and a flexible lakehouse provides the essential foundation for that transformation. Within an integrated AI stack, the Data Lakehouse holds a strong position, with 33.6% of respondents citing its strategic value as the flexible storage foundation.

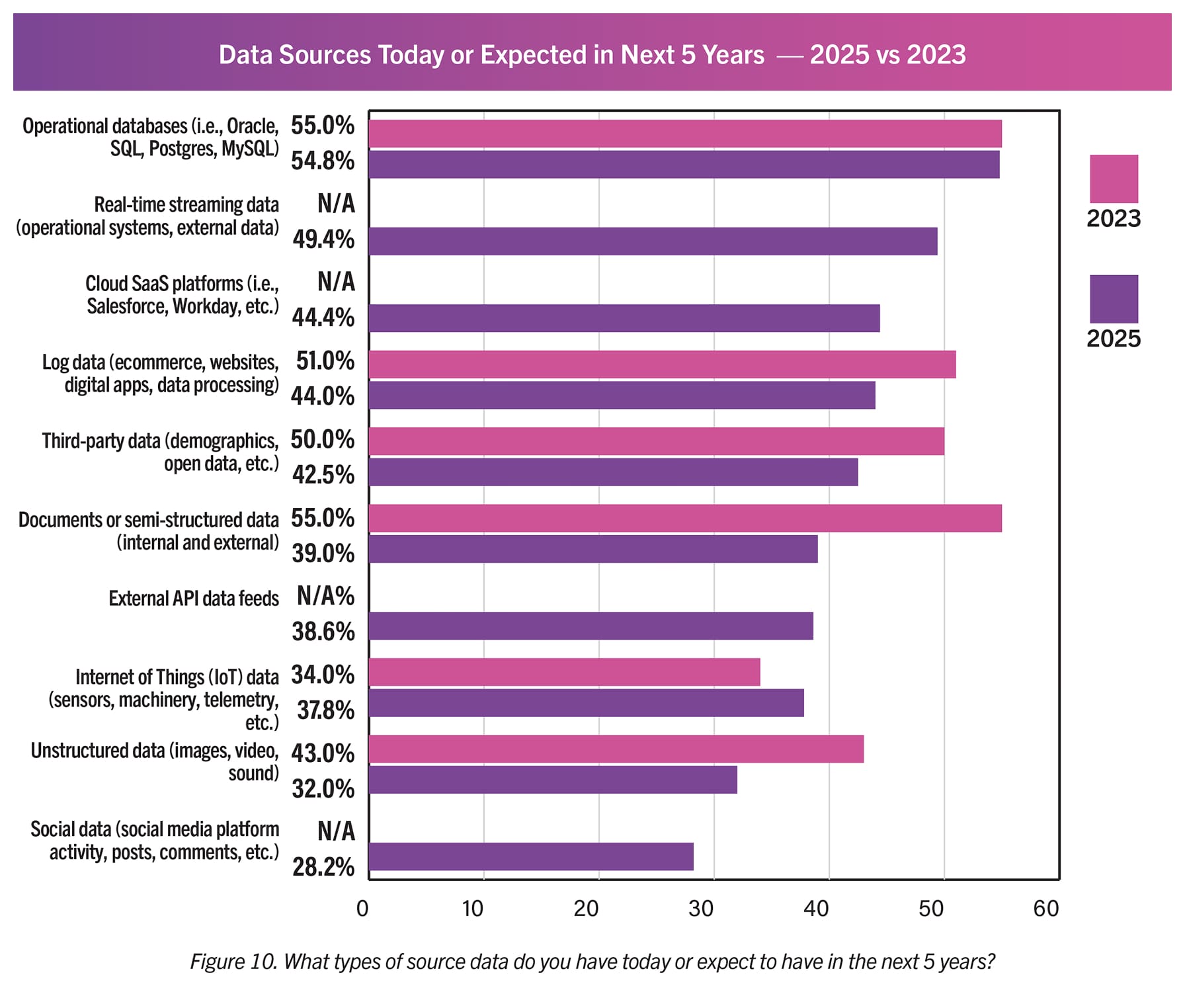

A Data Lakehouse isn't just one option among many; it's the strategic architectural core required to handle the diverse data formats and flexible processing that AI demands. Organizations that expect to ingest real-time streaming data (49.4%), data from cloud SaaS platforms (44.4%), and external API feeds (38.6%) need the speed, scale, cost-efficiency, and interoperability that traditional warehousing can't provide.

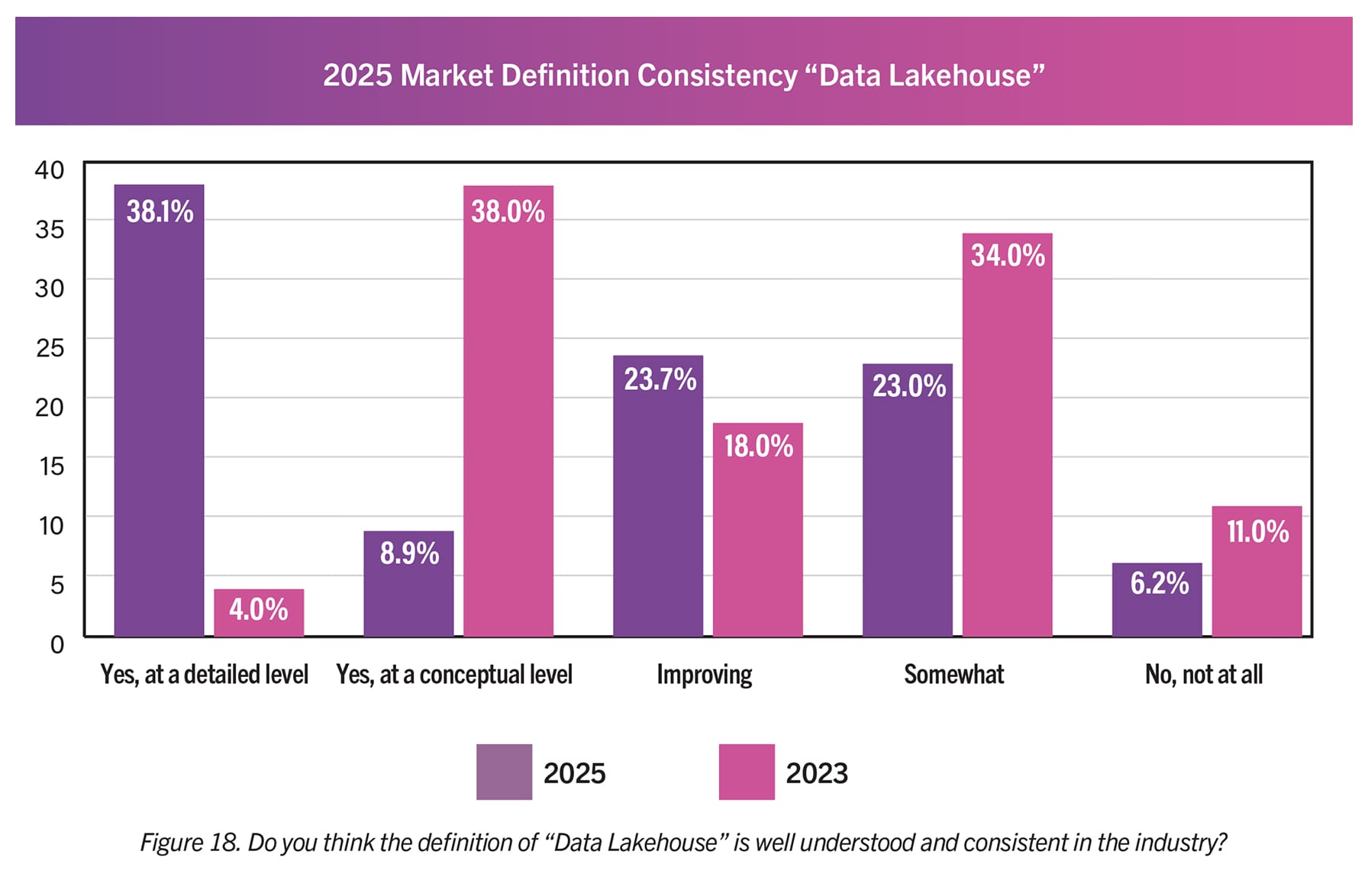

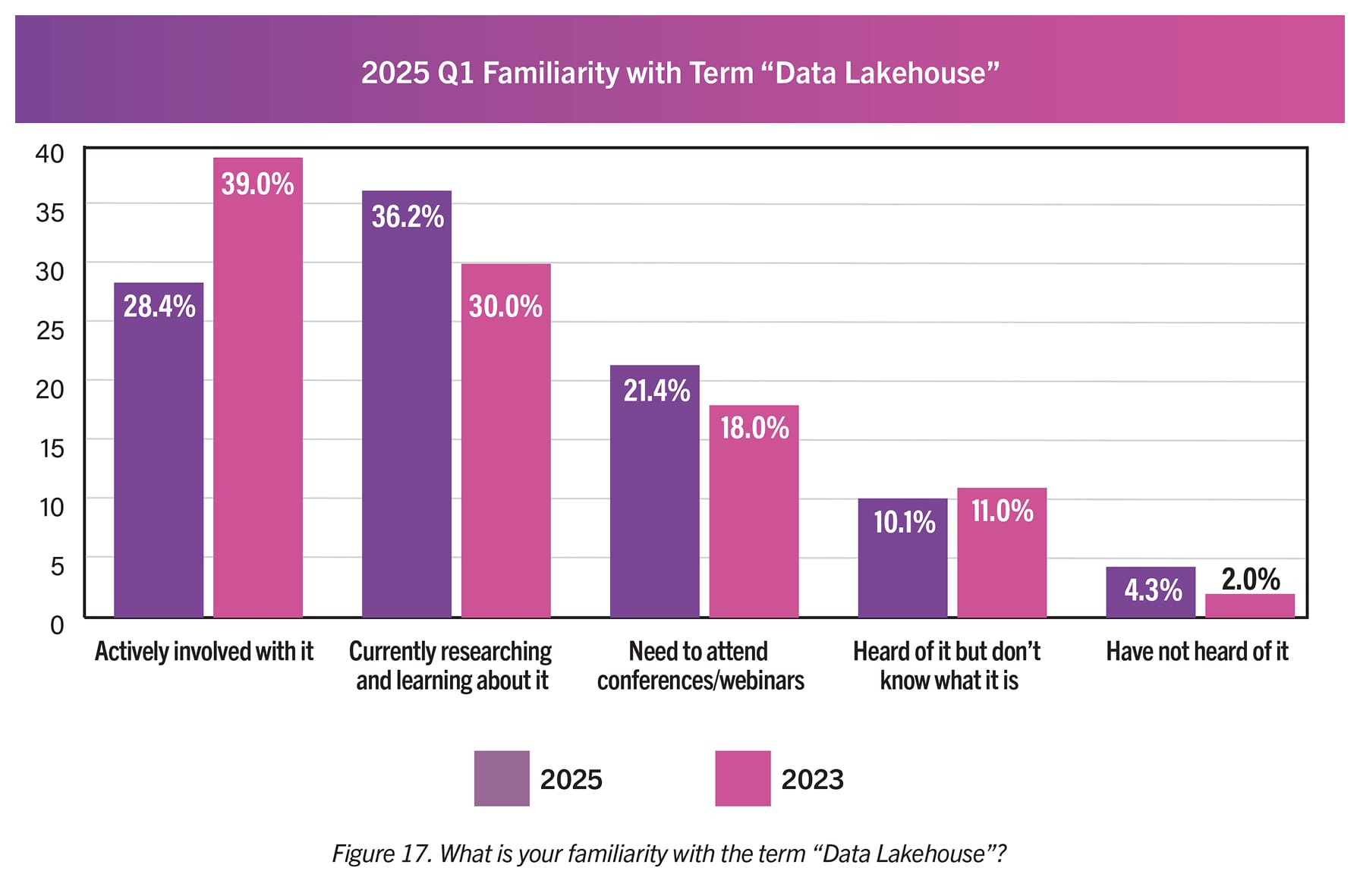

The Data Lakehouse Is a Mature, Understood, and Valued Architecture

The Data Lakehouse is not an experimental trend. The market has moved past initial hype and into deep, practical understanding. Organizations with "detailed understanding" of the Data Lakehouse skyrocketed from 4.0% in 2023 to 38.1% in 2025.

This maturity came quickly. Competitive organizations have moved beyond surface-level discussions and built hands-on expertise with the lakehouse. It is a proven architecture that delivers value today.

The Data Lakehouse ranks among the top three architectures that respondents consider "most valuable to your company over the next 5 years," with a strong showing of 33.6%. Industry leaders are making long-term strategic bets on the Data Lakehouse as the platform that will provide sustained competitive advantage throughout the AI era.

Investment Strategy Has Shifted to Tactical, Fast-Value Projects

The era of massive, multi-year monolithic projects is fading. Budgets are smaller and more focused: the share of investments over $1M fell from 23.8% to 6.9%, while tactical budgets under $500K surged from 23.3% to 67.9%.

Companies have learned that "big bang" projects create more problems than they solve. The market has shifted to focused, use-case-driven deployments that show value quickly. Modern lakehouse platforms support this approach: you can start with a single, critical AI project and scale as you prove the ROI, without a massive upfront investment.

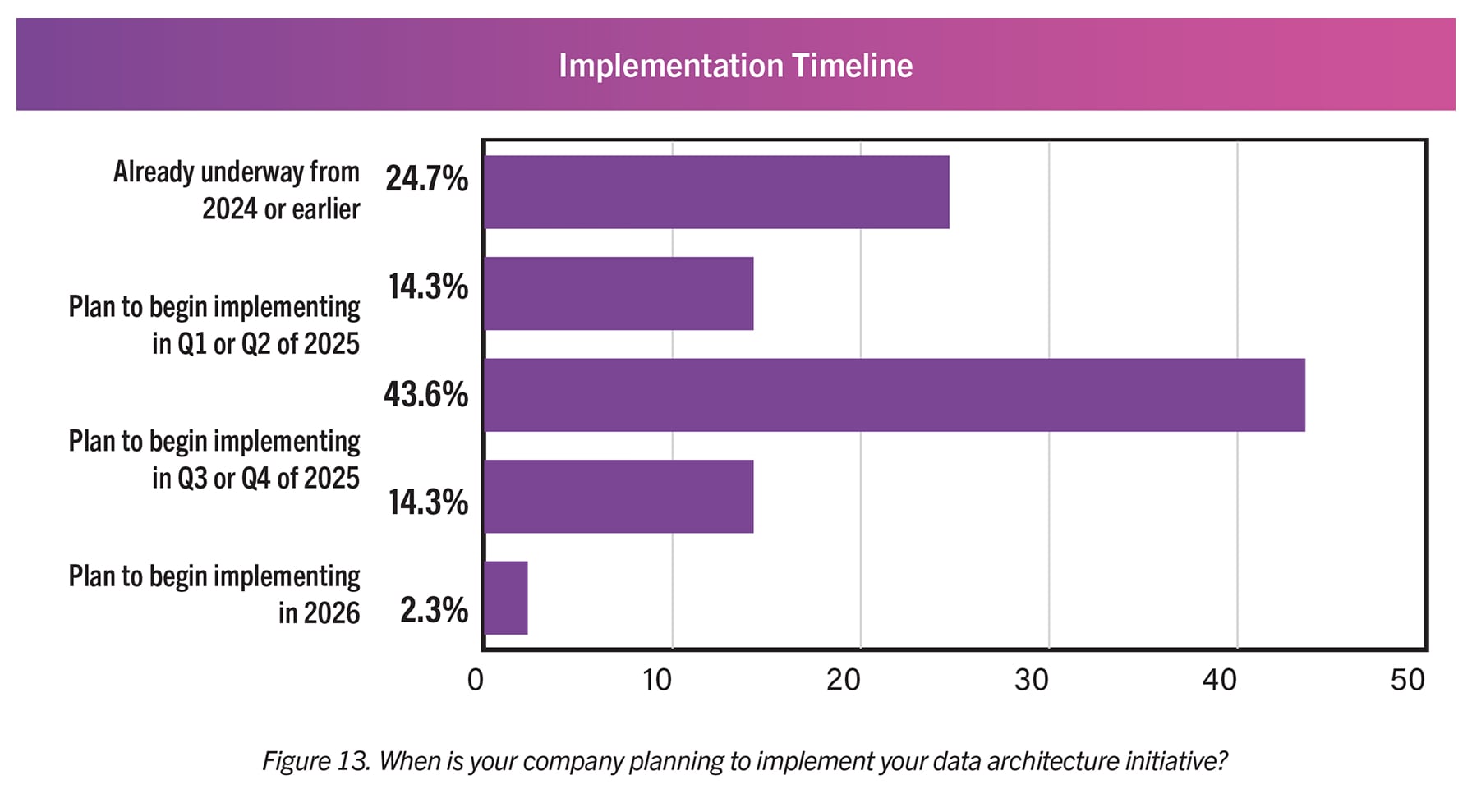

The synchronized timeline creates competitive pressure: 43.6% of organizations target Q3-Q4 2025 implementation and 82.6% plan deployment by year-end.

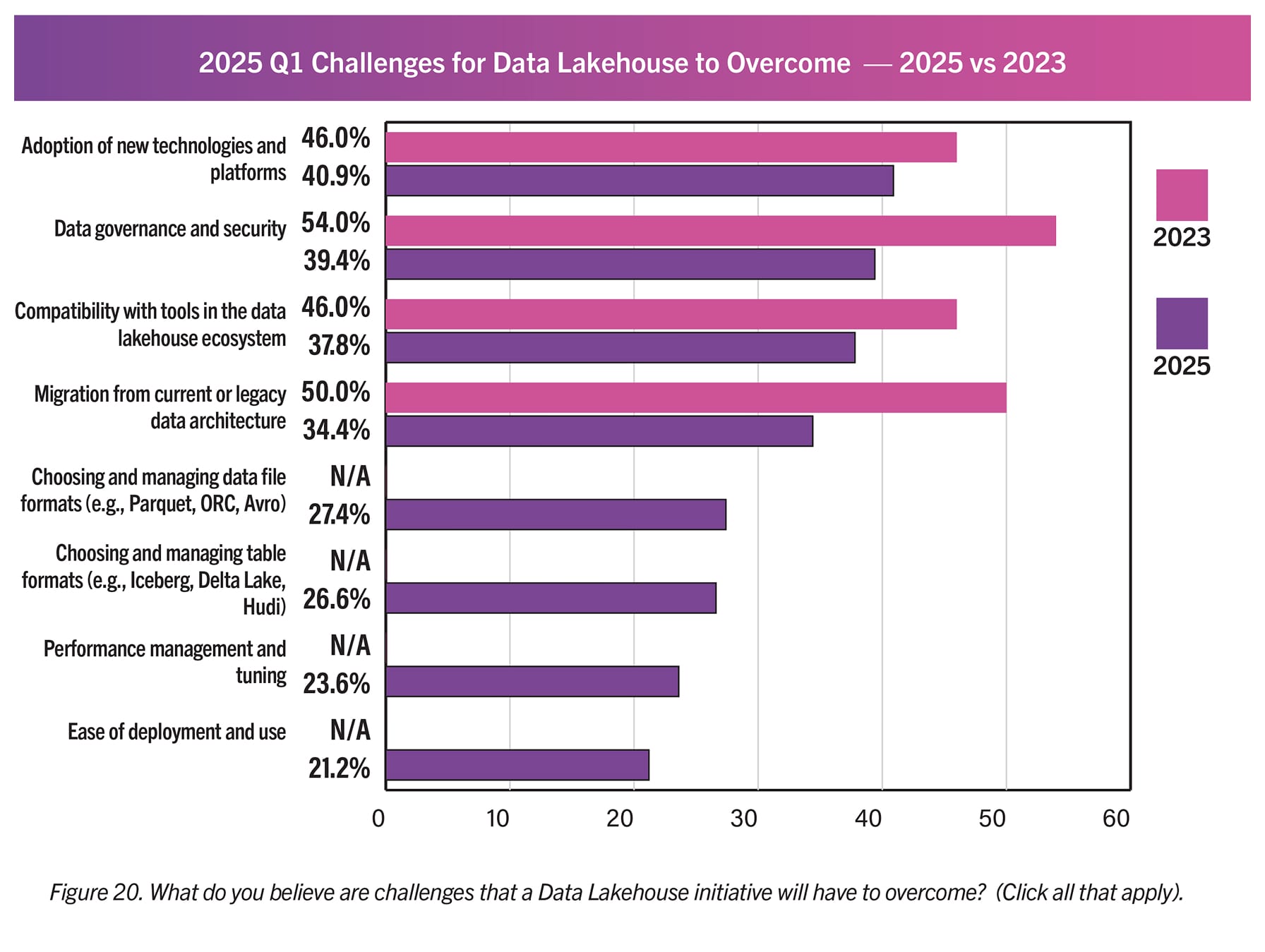

Lakehouse Implementation Challenges Have Shifted to Format and Performance Management

As companies adopt the lakehouse, their challenges have evolved from basic setup to more sophisticated technical issues.

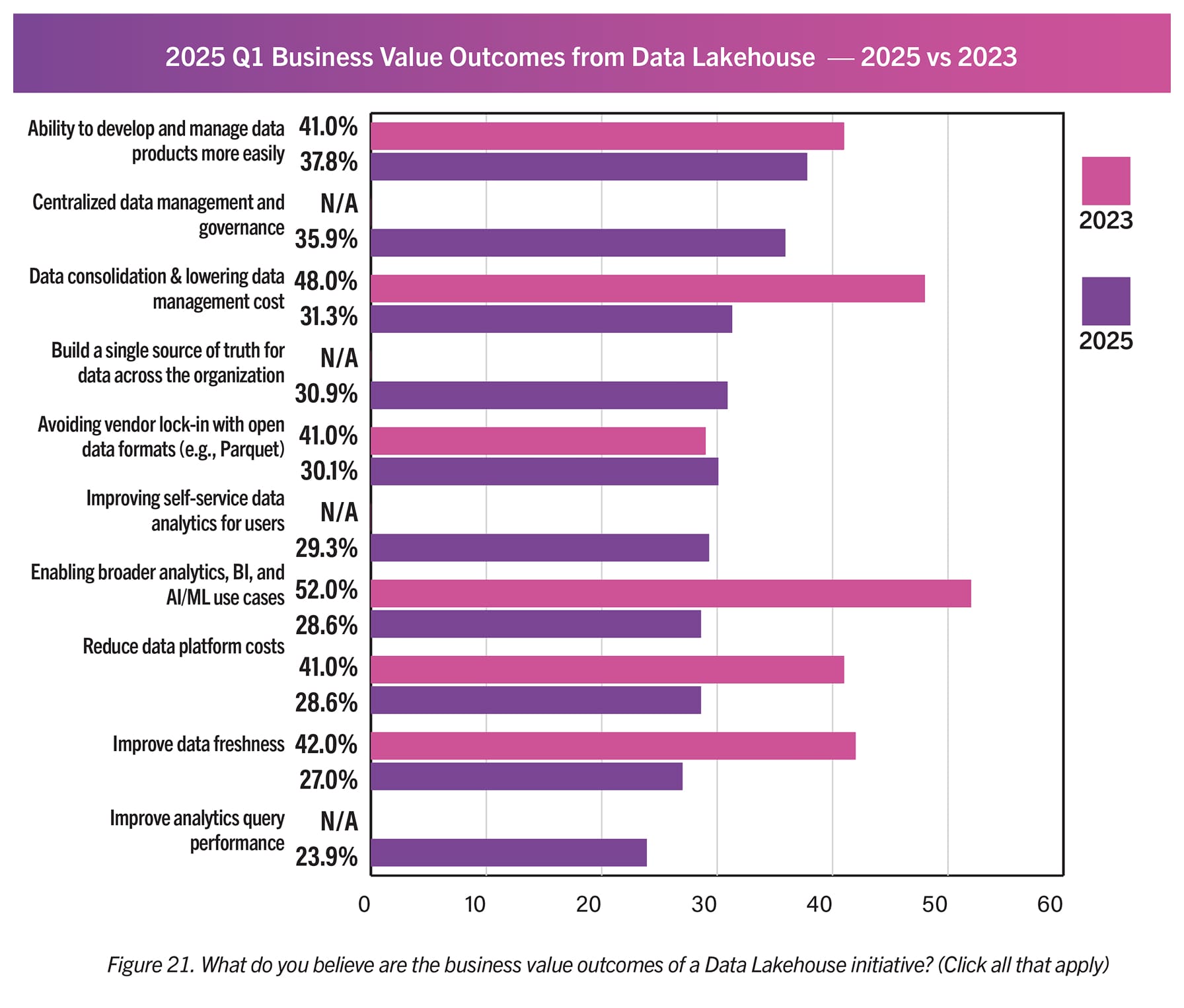

Strategic concerns like data governance have decreased (from 54.0% to 39.4%), while new, more technical challenges have emerged as primary concerns for 2025: choosing and managing data file formats (27.4%) and choosing and managing table formats such as Apache Hudi™, Apache Iceberg™, and Delta Lake (26.6%).

Once you commit to a lakehouse, the next challenges become managing the complexity of open table formats, performance tuning, and data ingestion.

Basic streaming ingestion is largely solved. The leading ingestion challenge is now "solving complex use cases involving real-time integration with historical data," cited by 47.5% of respondents.

Openness and Avoiding Lock-In Are Key Customer Concerns

When implementing a Data Lakehouse, 30.1% of organizations cite "avoiding vendor lock-in with open data formats" as a critical business value outcome. Thanks to Apache XTable™ (incubating) and OneSync™, Onehouse is the most interoperable Data Lakehouse platform on the market and addresses this concern head-on.

Organizations understand that owning their data in standard open formats such as Apache Hudi™, Apache Iceberg™, or Delta Lake is critical, and your peers are actively working to avoid vendor lock-in. With open formats, your data remains yours, accessible and queryable by any compatible engine you choose.

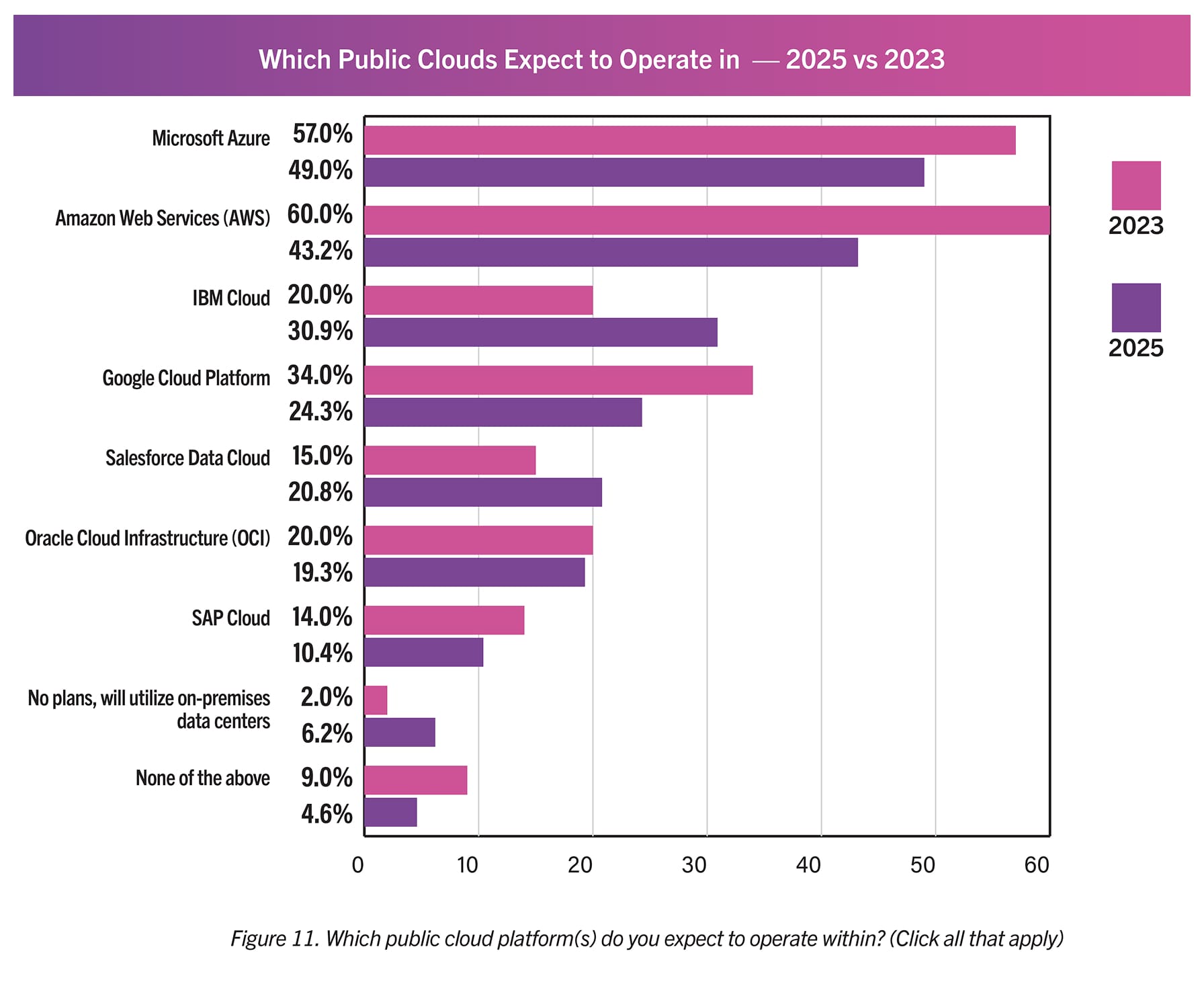

Multi-cloud adoption has increased, with organizations now selecting an average of 2.4 cloud platforms compared to 2.1 in 2023. Only 6.2% plan on-premises-only operations.

The Path Forward

Organizations are looking to build the right data foundation for an AI-centric future, and the lakehouse is their architecture of choice.

This report shows that as organizations mature, the challenges shift from strategy to the painful technical realities of implementation: managing open table formats, tuning for performance and efficiency, and stitching together real-time and historical data.

This is the critical engineering gap Onehouse was built to fill.

Instead of dedicating your best engineers to the undifferentiated heavy lifting of format compatibility, data ingestion, and performance tuning, our managed platform handles it for you. We automate the complex operations that the report identifies as the primary new bottlenecks, freeing your team to focus on the one thing that matters: building the AI applications that drive business value.

Stop wrestling with table formats and start delivering on your AI roadmap. See how Onehouse can help you launch a production-grade lakehouse in minutes, not months.
