Onehouse Product Demo - Building a data lake for Github analytics at scale

After our exciting announcement of Onehouse availability on the AWS marketplace, many have been eager to see the product in action. In this blog we will take you through a real life use case and show you how quickly you can stand up an end to end analytics pipeline using Onehouse.

Onehouse product overview

Onehouse is a cloud-native, managed foundation for your data lakehouse that automatically ingests, manages and optimizes your data for orders of magnitude performance improvements.

One of the key benefits of Onehouse is that it allows customers to build data lakes in a matter of days or weeks instead of the months or years it takes with a DIY approach. By automating data management tasks and providing a fully managed platform, Onehouse frees up your time to focus on deriving insights from data, rather than managing it. This can result in significant cost savings as organizations can avoid the need to hire and train expensive data management teams. Additionally, Onehouse provides a range of enterprise security features, including encryption, access controls, and monitoring, to ensure that data is protected at all times.

Product demo setup

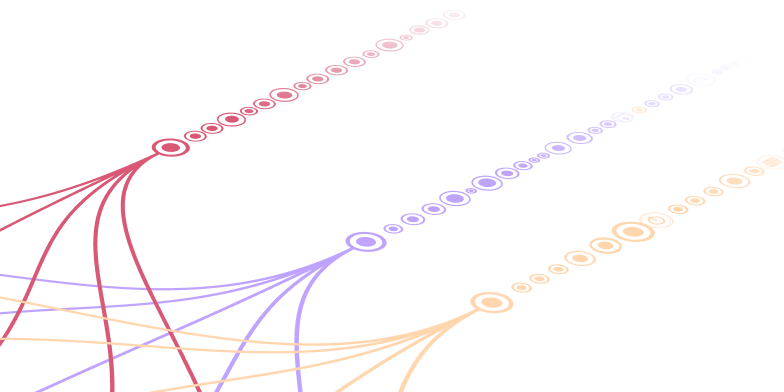

Let’s take a real life use case and show you how quickly you can build an end to end analytics pipeline using the Onehouse Managed Data Lakehouse solution. Internally we have built a data lake for Github analytics using the Onehouse product to derive useful insights on open source communities. With Onehouse I will show you how you can define your source connection, create a stream capture, and then analyze your data in real time with tools like Amazon Athena and Preset. Here is what the overall solution architecture looks like:

Github archive as the source

The data source we use for this demo is the Github Archive project, which captures all public events occurring on Github and aggregates them into hourly archives. We used the Onehouse Managed Data Lakehouse solution to pull Github archive files once every hour, load the data into a raw Amazon S3 bucket, and make it ready for further analysis.

Once available you can define this S3 bucket as a Source Connection in the Onehouse Management Console. Go to Connections -> Sources and click “Add New Source”:

In this use case, we will create a S3 data source named as "Github Historical Data":

Adding a new datasource requires only a few clicks by a user to provide the basic info such as the name of the source and the name of the S3 bucket, etc. After that you can verify the result by checking the JSON definition (Actions -> Show JSON) for the new data source:

Add an ingestion stream capture

In the Onehouse Management Console, go to Services -> Capture -> Add New Stream. Choose “Github Historical Data” as the source and follow the instructions to create an ingestion stream to load into the append-only table “githubevents” in the data lake.

Incremental ETL transformations

The githubevents table we built above is considered to be a raw layer sometimes referred to as a “bronze layer” if you follow the medallion architecture. This raw layer contains all the data ingested into the data lake in its original, unprocessed format. The data in this layer is not subject to any quality checks or transformations. Customers usually set out to build a trusted or “silver” layer next to clean, transform, and enrich the data to meet specific quality standards. The data in this layer is governed by policies to ensure data quality, security, and privacy controls to meet the business needs.

Onehouse offers an incremental ETL feature that allows for easy transformation of data in the bronze layer and subsequent loading into the silver layer. This feature includes several low-code or no-code transformation options:

- CDC log transformation

- JSON parsing

- Row filtering

- Field flattening

- Derived date column

- Data masking

- +much more

Onehouse also offers full extensibility allowing customers to bring their own custom code and libraries to represent as transformation building blocks in their pipelines. This enables your data engineers to evolve your data pipelines while also easily sharing your in-house transformation logic for your less technical peers to leverage in a low-code way.

In the Onehouse Management Console, go to Services -> Capture -> Add New Stream, choose the existing Hudi table “githubevents” in the data lake as the source. Pick the Write Mode as Mutable so that updates/deletes and de-duplication can be performed on the target table in the silver layer. For the demo, we added a row filter transformation to separate Github Issue Events, and then we flattened the JSON object of the payload that came from the Github Archive. We also configured record and pre-combine keys on the Hudi table to guarantee uniqueness and handle late arriving data with ease.

The final step in the stream capture pipeline creation is to choose which catalog you want to sync the new tables with. Onehouse supports many catalogs today and you can sync to more than one at a time:

- AWS Glue Catalog

- Hive Metastore

- GCP DataProc Metastore

- GCP BigQuery + BigLake

- DataHub

- Snowflake and Databricks via Onetable

In this demo I chose to sync to the default AWS Glue data catalog:

Click “Start Capturing” and go to Services -> Capture to confirm the new capture stream (githubissues_demo) is actually up and running.

In the Onehouse management console I can view the state and progress of all my stream captures.

Built-in data lake table optimizations

Once the stream capture is running I will now have a table created in the S3 data lake, synced to the AWS Glue data catalog, and ready for analytics from a variety of query engines. The Onehouse Management Console provides a preview of the data and statistics about the database (and this feature can be disabled at customer’s request). I now have a 1.7 Terabyte table with 3.6 Billion records loaded.

Onehouse offers comprehensive data management and built-in support for Apache Hudi’s™ advanced table optimization services such as clustering, compaction, and cleaning. These services are critical for optimizing the performance and cost-effectiveness of Hudi tables, especially in large-scale data processing environments.

In the specific use case mentioned, Onehouse leverages the inline clustering process to cluster the raw Hudi table based on the EventType column. This is a powerful feature that allows for efficient querying and analysis of large datasets, as the data is sorted based on the specified clustering keys. By clustering the data based on the EventType column, Onehouse ensures that records with the same event type are stored together, which can improve query performance and simplify downstream processing.

In addition to inline clustering, Onehouse also provides support for compaction and cleaning of Hudi tables. Compaction involves merging small files into larger base files to reduce the number of files and improve query performance. Cleaning removes expired data files from a Hudi table.

Use Amazon Athena to query

Apache Hudi is compatible with many popular query engines, including Amazon Athena. Since Hudi is designed to store data in a columnar Parquet format and optimize for query performance, it provides fast and efficient access to data for query engines like Athena. In the context of querying the Github data lake, using Hudi as the storage layer can provide several benefits. For example, Hudi can help optimize query performance by using techniques such as column pruning, predicate pushdown, and data skipping.

Since Onehouse synced all the metadata to the Glue data catalog, no extra configurations are needed to start using Athena. You can just open Athena, choose the Glue catalog and start querying the Hudi tables Onehouse has created.

For this demo example, I have developed SQL statements for two analytical use cases:

- List all the Github open issues in the last 7 calendar days for the Apache Hudi repo

- Show the total # of Github closed Issues in the 7 calendar days

Create dashboards using Preset

To visualize and explore these Github datasets, we can use Preset which is a fully-hosted cloud service that provides a hassle-free solution for using Apache Superset, an open-source data visualization and exploration platform. With Preset, users can instantly connect to popular SQL databases and cloud query engines, such as Amazon Athena, without needing to worry about managing the underlying infrastructure.

Using Preset, users can easily create interactive dashboards, charts, and graphs from their data. The platform supports a wide variety of visualization options, including heatmaps, scatterplots, and time-series charts. Users can also explore their data using SQL queries or drag-and-drop interfaces to create custom filters and pivot tables.

Conclusion

This blog has demonstrated the convenience and efficiency of the Onehouse product in establishing an end to end data pipeline for Github Archive data extraction, loading, and transformation. Using Onehouse, a new table can be generated in a data lakehouse, which can be seamlessly queried and leveraged to derive valuable insights using Amazon Athena and Preset. If you want to learn more about Onehouse and would like to give it a try, please visit the Onehouse listing on the AWS Marketplace or contact gtm@onehouse.ai.

I would like to thank my fellow Onehouse team members Rajesh Mahindra and Bhavani Sudha Saktheeswaran for their contributions to this blog.

Read More:

Subscribe to the Blog

Be the first to read new posts